Data democratization makes data accessible and understandable to everyone in an organization. Yet despite years of investment in data lakes, analytics tools, and isolated AI pilots, most enterprises still struggle to turn information into everyday advantage. High-quality data and advanced models remain firmly locked behind specialist teams, creating bottlenecks that slow decision-making and leave frontline employees flying blind in a market where speed is a matter of survival.

This issue can be solved through a pragmatic four‑part roadmap:

Enterprises that execute this agenda report up to 3× faster product‑iteration cycles, a 20% reduction in operational costs, and a 5–10% revenue uplift within eighteen months: proof that opening the gates to data and AI unlocks real, measurable value.

Over the past decade, organizations have poured millions into data lakes, dashboards, and AI proofs-of-concept, yet insight remains scarce at the edge. Data is trapped in functional silos, access mediated by overstretched specialists, and experimentation queues stretch for weeks.

RAND and Gartner estimate that 80% of AI projects fail and only 30% progress beyond the pilot stage, symptoms of poor data quality, limited reach, and fragile ownership models. Meanwhile, oceans of raw information (customer behavior, supply-chain signals, machine telemetry) lie dormant. As a result, product teams lack the resources they need for rapid iteration, and executives are left to steer with partial visibility.

Bottom line, data has become an abundant but inaccessible raw material, forced into scarcity by organizational architecture rather than physics.

That inertia is becoming untenable. McKinsey’s 2024 State of AI survey shows enterprise adoption leaping to 72%, with 65% of companies already using GenAI in at least one business function.

Here’s how the current dynamics look:

In this new order, waiting days for a central data team to run a query can mean missed market windows and strategic blind spots.

The antidote to all of this is true data democratization: initiatives driven directly from the CTO Office that open trusted data sets and governed AI workbenches to everyone who can turn insight into impact.

Think of it this way: What do you get when you converge secure infrastructure, self-service platforms, upskilled talent, and a curiosity-driven culture?

You end up with three outcomes:

The reality is that data democratization is no longer a side project; it is the operating system for the enterprise in the Gen AI era. It enables cross-functional teams—from finance analysts building forecasting bots to marketers refining campaigns on the fly—to solve problems at the speed of thought and innovate responsibly.

Before any roadmap can gain traction, technology leaders need a cold-eyed view of what is already in place—and what is missing. A structured diagnostic should cover three critical areas:

Essentially, there are two “pains”:

Commercial, operations, and product teams complain of week-long request queues, resorting to spreadsheet extracts and gut-feel decisions. They see analytics as a black box that delivers late or not at all, undermining trust and blunting agility.

Meanwhile, centralized data engineers and data scientists face an endless backlog of ad-hoc tickets, constant context-switching, and escalating compliance risk. They spend more time policing access and firefighting pipeline issues than innovating.

The diagnostic’s goal is not to assign blame but to create a single, evidence-based baseline that both sides recognize. When framed this way, data democratization ceases to be a lofty ideal and becomes a pragmatic response to clearly documented friction. It sets the stage for the strategic roadmap that follows.

When every new feature or hypothesis must wait in a queue for scarce data-science talent, product iteration grinds to a halt. A survey of 750 enterprises found that half need up to 90 days just to push a single machine-learning model into production, and 18% take even longer. That is a crippling delay in markets that refresh weekly.

In the absence of governed, self-service analytics, employees build their own “islands” of insight: rogue SaaS tools, local BI apps, and—still the perennial favorite—Excel sheets passed around by email.

Recent research shows 90% of organizations still rely on spreadsheets for mission-critical data, despite plans to automate. The result is conflicting versions of the truth, hidden compliance risk, and data that never feeds AI pipelines.

Take a moment to reflect on your organization’s practices. Does it belong to the 90% that still rely on spreadsheets? If so, it is time to step up and drive the change.

Expertise becomes a bottleneck when access to models and deployment pipelines is restricted to a small, over-extended elite.

According to a 2024 industry survey, only 22% of data scientists say their “revolutionary” models usually make it into production, while 43% report that most of their work never sees daylight. Business stakeholders lose visibility and confidence, reinforcing a vicious cycle of centralized control and limited impact.

Individually, these symptoms sap speed. But together, they signal a systemic barrier to value realization. Recognizing them early provides the incentive—and the evidence—to pursue enterprise-wide democratization of data.

NOTE: Each step includes objectives, success criteria, and quick‑win tips.

A scalable, governed data layer is the foundation of every other democratization effort. Whether you adopt a lakehouse, data mesh, or data fabric pattern, the goal is the same: expose high-quality, trusted data to every authorized user without sacrificing security or compliance.

A unified governance plane—catalog, lineage, access controls, and privacy tooling—binds the architecture together so that insight moves freely while risk stays contained.

Establishing such a foundation transforms data from a guarded commodity into a shared utility, setting the stage for self-service analytics, low-code AI, and, ultimately, enterprise-wide innovation.

| KPI | Target | Why It Matters |
| --- | --- | --- |
| Catalog coverage | ≥ 90% of critical tables & objects | Ensures users can actually find data. |
| Time to onboard a new dataset | < 1 day | Measures the agility of the ingestion pipeline. |
| Certified-data adoption | ≥ 70% of analytical queries hit governed sources | Indicates trust and reduced shadow copies. |
| Policy-violation rate | < 1% of access requests flagged | Validates controls without throttling innovation. |
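
To make the KPI table concrete, here is a minimal Python sketch, assuming you can pull monthly counts from your catalog, query logs, and access-request system. The `FoundationCounts` fields and the sample numbers are hypothetical. It computes three of the four KPIs (time to onboard a dataset needs timestamps rather than counts) and checks each against the targets above.

```python
from dataclasses import dataclass


@dataclass
class FoundationCounts:
    """Hypothetical monthly counts from catalog, query logs, and access workflow."""
    critical_assets: int            # critical tables & objects in scope
    cataloged_critical_assets: int  # of those, registered in the catalog
    analytical_queries: int         # all analytical queries in the period
    governed_queries: int           # queries that hit certified/governed sources
    access_requests: int            # access requests received in the period
    flagged_requests: int           # requests flagged as policy violations


def pct(part: int, whole: int) -> float:
    """Percentage, returning 0.0 when the denominator is zero."""
    return 100.0 * part / whole if whole else 0.0


def foundation_kpis(c: FoundationCounts) -> dict[str, tuple[float, bool]]:
    """Return each KPI value (in %) with a flag for whether it meets the table's target."""
    coverage = pct(c.cataloged_critical_assets, c.critical_assets)
    adoption = pct(c.governed_queries, c.analytical_queries)
    violations = pct(c.flagged_requests, c.access_requests)
    return {
        "catalog_coverage": (coverage, coverage >= 90.0),         # target >= 90%
        "certified_data_adoption": (adoption, adoption >= 70.0),  # target >= 70%
        "policy_violation_rate": (violations, violations < 1.0),  # target < 1%
    }


counts = FoundationCounts(400, 372, 10_000, 6_500, 800, 6)
for name, (value, on_target) in foundation_kpis(counts).items():
    print(f"{name}: {value:.1f}% ({'on target' if on_target else 'below target'})")
```

With these sample counts, catalog coverage and the violation rate meet their targets while certified-data adoption falls short, the kind of signal that suggests shadow copies are still being queried.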

To avoid regulatory fines, reputational damage, and stalled adoption, technology leaders must build robust governance into their modern architecture. This practice translates abstract principles (such as ethics, privacy, and compliance) into enforceable policies and, more importantly, clear accountability. Done well, it accelerates access by giving stakeholders confidence that the right guardrails are always in place.

| KPI | Target | Why It Matters |
| --- | --- | --- |
| Written policies mapped to data/model tiers | 100% of critical assets | Eliminates ambiguity; speeds approvals |
| Time to approve a new data-access request | < 4 hours | Signals frictionless yet controlled access |
| Models with automated bias & drift tests | ≥ 90% in production | Demonstrates ethical compliance at scale |
| Audit issues flagged in the last review | 0 material findings | Validates controls and reduces regulatory risk |

All of this might sound like too much to handle, perhaps unnecessary, or even a brake on innovation. It is not. Clear governance is a traffic system that lets every team move quickly and safely on the same road, and a map that eliminates wrong turns.
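
One way to make "written policies mapped to data/model tiers" enforceable is to express them as code that the access workflow calls. The sketch below is a hypothetical illustration, not any specific product's API: the tier names, the `ROLE_CLEARANCE` table, and the three-way decision are all assumptions. Requests inside a role's clearance are approved instantly, which is what makes a sub-four-hour approval target realistic; only out-of-clearance requests reach a human.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical sensitivity tiers for data sets and models (higher = more sensitive)."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3  # e.g., personal data under privacy regulation


# Hypothetical mapping: the highest tier each role may access without review.
ROLE_CLEARANCE = {
    "analyst": Tier.INTERNAL,
    "data_steward": Tier.CONFIDENTIAL,
    "privacy_officer": Tier.RESTRICTED,
}


def decide(role: str, asset_tier: Tier, documented_purpose: bool) -> str:
    """Return 'auto-approve', 'review', or 'deny' for a single access request."""
    clearance = ROLE_CLEARANCE.get(role)
    if clearance is None:
        return "deny"  # unknown roles are denied by default
    if asset_tier <= clearance:
        return "auto-approve"  # within clearance: no ticket, no queue
    if documented_purpose and asset_tier < Tier.RESTRICTED:
        return "review"  # above clearance but not restricted: route to a data steward
    return "deny"  # restricted data, or no stated purpose


print(decide("analyst", Tier.INTERNAL, documented_purpose=False))     # auto-approve
print(decide("analyst", Tier.CONFIDENTIAL, documented_purpose=True))  # review
print(decide("analyst", Tier.RESTRICTED, documented_purpose=True))    # deny
print(decide("contractor", Tier.PUBLIC, documented_purpose=True))     # deny
```

Keeping the rules in one reviewed table also gives auditors a single artifact to inspect instead of scattered ticket histories.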

Self-service tooling turns every knowledge worker into a potential “citizen data scientist.” Modern BI (business intelligence), AutoML, and low-code/no-code platforms hide the technical “plumbing,” so business experts can ask questions, build models, and embed insights without idling in an IT queue. That abstraction is what accelerates adoption.

A recent Gartner survey found an 87% jump in the number of employees using analytics and BI within the same organisations, while low-code/no-code (LCNC) suites can shrink application development time by up to 90%.

AutoML case studies confirm the speed gains. For instance, Consensus Corp cut model-deployment cycles from 3–4 weeks to just 8 hours.

However, to capitalize on these advances, tech leaders must design a clear enablement playbook.

| KPI | Target (first 12 months) | Why It Matters |
| --- | --- | --- |
| Active self-service users / total potential users | ≥ 50% | Signals broad reach beyond specialist teams |
| Average analytics request turnaround | < 1 hour (was days) | Measures friction removed from the decision flow |
| Citizen-built models promoted to prod | ≥ 10 per quarter | Proves AutoML is creating deployable value |
| Time to embed a new insight/API into a product | < 2 sprint cycles | Confirms platform openness for dev teams |
| Governance violations from self-service actions | Zero critical | Demonstrates “freedom within guardrails” |

Remember, it is vital to lower the technical barrier and keep governance invisible but firm. Soon, your organization will convert pent-up curiosity into a continuous stream of data-driven micro-innovations that compound over time.

A world-class platform is useless if people can’t—or won’t—use it.

Building enterprise-wide skill and confidence requires a structured, incentivised program that moves employees up the data literacy ladder and turns early enthusiasts into full-blown citizen data scientists.

Hence, the

| KPI | Target (first 12 months) | Rationale |
| --- | --- | --- |
| Workforce at Awareness level | ≥ 70% | Reflects broad reach; 86% of leaders now see literacy as critical daily work |
| Workforce at Proficiency level | ≥ 25% | Creates a core of self-service power users |
| Certified citizen data scientists | ≥ 5% of headcount | Meets growing demand; 41% of firms already run citizen-dev programmes |
| Data-driven OKRs adopted | 100% of product & commercial teams | Aligns incentives with behaviour change |
| Decision-making efficiency uplift | Proof of ≥ 20% faster cycle time vs. baseline | Mature training programmes drive decision efficiency to 90% |

The bottom line is that you want to treat skills as a product, with a clear roadmap, success metrics, and recurring releases. By doing so, you convert curiosity into competence and create an internal talent engine that scales with your data and AI ambitions.

The following examples illustrate how objectives link directly to measurable, time-bound outcomes that track both adoption (behavior change) and tangible business impact.

| # | Objective | Key Results |
| --- | --- | --- |
| 1 | Accelerate decision-making through self-service analytics | 1. Cut average request-to-insight time from 3 days to under 4 hours. 2. Reach 50% active adoption of the BI self-service portal across commercial and product teams. 3. Shrink the central data team ticket backlog by 70% without increasing headcount. |
| 2 | Improve forecast accuracy with citizen-built ML models | 1. Train and promote ≥ 3 AutoML models (built outside the data-science team) into production for demand, churn, and pricing forecasts. 2. Reduce quarterly demand-forecast variance from ±8% to ±3%. 3. Attribute ≥ €2 million in incremental margin to forecast accuracy gains by year-end. |
| 3 | Embed a data-literate culture enterprise-wide | 1. Elevate 70% of employees to Awareness and 25% to Proficiency on the Data Literacy Ladder via internal academy courses. 2. Certify 5% of staff as “Citizen Data Scientists” and assign them to mentor at least two peers each. 3. Ensure 100% of business-unit OKRs include a measurable data or AI metric (e.g., “Increase campaign ROI by 10% using segmentation dashboards”). |
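
Scoring progress on key results like these is simple arithmetic once each KR has a baseline and a target. The sketch below assumes linear scoring clamped to 0–1, a common OKR convention rather than anything this roadmap prescribes; the "current" values and the 10% adoption baseline in the usage lines are invented for illustration.

```python
def kr_progress(baseline: float, target: float, current: float) -> float:
    """Linear progress from baseline to target, clamped to [0.0, 1.0].

    The sign of (target - baseline) sets the direction, so the same function
    scores "reduce" KRs (e.g., turnaround time) and "increase" KRs (e.g., adoption).
    """
    if target == baseline:
        raise ValueError("target must differ from baseline")
    progress = (current - baseline) / (target - baseline)
    return max(0.0, min(1.0, progress))


# Objective 1, KR 1: request-to-insight time, 72 h -> 4 h; hypothetical current 20 h.
print(f"{kr_progress(72, 4, 20):.2f}")
# Objective 2, KR 2: forecast variance, ±8% -> ±3%; hypothetical current ±5%.
print(f"{kr_progress(8, 3, 5):.2f}")
# Objective 1, KR 2: self-service adoption, assumed 10% baseline -> 50%; hypothetical current 35%.
print(f"{kr_progress(10, 50, 35):.2f}")
```

Clamping keeps an overshoot from masking a lagging KR when scores are averaged across an objective.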

Even the best tools and governance crumble if the culture rewards intuition over evidence.

Embedding a data-driven mindset starts with a clear executive narrative, reinforced by visible rituals and again by the way success is celebrated.

(It may sound like something adults shouldn’t waste time on, but if you fail to celebrate, you work against built-in human programming and, consequently, impede progress.)

| KPI | Target | Why It Matters |
| --- | --- | --- |
| Executive comms referencing data stories | Mentioned in 100% of quarterly meetings | Keeps the narrative front-of-mind |
| Weekly KPI stand-up attendance (directors+) | ≥ 90% average participation | Demonstrates leadership commitment |
| Experiment showcases per quarter | ≥ 6 cross-functional demos | Normalises evidence-based iteration |
| “Insight of the Month” awards issued | 12 per year | Shifts recognition from activity to learning |
| Employee survey: “We use data to make decisions.” | +15 pp improvement YoY | Measures cultural adoption at scale |

Quick-Win Tips

When leadership tells consistent data stories, teams practice data rituals, and insights earn the loudest applause, a culture of evidence takes root, ensuring the technology and talent investments made earlier translate into sustained competitive advantage.

Approach:

| Time | Owner | Activity | Example Content |
| --- | --- | --- | --- |
| 00:00 – 00:02 | CTO (host) | Kick-off & narrative refresh | “Our primary goal is 15% QoQ ARR growth. Today we’ll see where the data says we stand and what we’ll adjust.” |
| 00:02 – 00:07 | Product Lead | Primary Goal & Adoption Metrics | • Active users (DAU/MAU): 82k → 85k (+3.6%) vs. target 4%. • Feature-usage depth: Avg. 4.9 actions/user (flat). Action: launch in-app tooltip A/B test by Wed. |
| 00:07 – 00:10 | Ops Lead | Reliability & Cost Metrics | • App latency (P95): 430 ms → 380 ms (-12%) after cache patch. • Cloud spend/DAU: €0.048 (-6% WoW). Action: shift image-processing to cheaper tier; ETA next sprint. |
| 00:10 – 00:12 | Data Science Rep | AI Model Health | • Churn-prediction AUC: 0.82 → 0.79 (drift detected). Action: retrain with the July cohort; deliver by Friday. |
| 00:12 – 00:14 | Marketing Lead | Growth Funnel | • Trial-to-paid conversion: 10.8% → 11.5% (+0.7 pp). Action: double down on in-app nudges shown to convert 18% better. |
| 00:14 – 00:15 | CTO | Round-robin: blockers & asks | 30-second shout-outs, escalate cross-team help, confirm next meeting. |
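
The Data Science item above (AUC falling from 0.82 to 0.79) is the kind of check that can run automatically before each stand-up. This minimal Python sketch computes ROC AUC from scratch via the pairwise Mann-Whitney formulation, so it needs no libraries, and raises an alert when AUC drops by more than a tolerance. The 0.02 threshold and the sample scores are assumptions; a production setup would typically use a library implementation on much larger samples.

```python
def auc(scores: list[float], labels: list[int]) -> float:
    """ROC AUC as the probability that a random positive outscores a random negative.

    Pairwise (Mann-Whitney U) formulation; ties count as half a win. O(P*N), so
    fine for a sketch but not for very large scoring samples.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def drift_alert(baseline_auc: float, current_auc: float, tolerance: float = 0.02) -> bool:
    """Flag drift when AUC has fallen by more than `tolerance` (assumed threshold)."""
    return baseline_auc - current_auc > tolerance


# Hypothetical churn scores and outcomes for the latest cohort.
current = auc([0.9, 0.4, 0.6, 0.2], [1, 1, 0, 0])
print(current, drift_alert(0.82, current))
print(drift_alert(0.82, 0.79))  # the stand-up example: a 0.03 drop trips the alert
```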

How It Works

| Barrier | Manifestation | Mitigation Strategy |
| --- | --- | --- |
| Cultural Resistance | “Not my job” mindset | Change‑management playbooks, storytelling |
| Skill Gaps | Analytics requests queue | Micro‑learning, peer labs |
| Risk & Compliance Concerns | Access locked down | Role‑based controls, sandboxing |
| Legacy Tech Debt | Data silos, brittle ETL | Incremental migrations, abstraction layers |
| ROI Uncertainty | Budget pushback | Leading & lagging KPI stack |

A multi-brand department-store group operating 30+ outlets across the GCC had fragmented product, inventory, and customer data locked in separate ERP, e-commerce, and loyalty systems. Marketing teams could not create consistent cross-channel recommendations, and campaign ROIs were flat-lining.

Game plan:

Executive sponsorship plus an integration-first mindset turned messy, siloed data into a revenue engine, demonstrating how a pragmatic “mesh-lite” architecture can pay off quickly.

A multinational logistics-equipment maker was losing millions to unplanned crane and conveyor failures. Reactive maintenance and paper logs led to frequent shipping delays and inflated repair budgets.

Working with services firm American Chase, the company instrumented 1,800 assets with IoT sensors feeding Azure IoT Hub. Predictive models built in Azure ML classified anomalies and automatically triggered work orders through Azure Logic Apps.

Citizen-friendly monitoring dashboards (Power BI) let plant managers experiment with thresholds without writing code, showing that self-service combined with solid data pipelines accelerates value capture.

A universal bank’s lending growth was stalled by legacy, rules-based scorecards that took six months to refresh and lacked explainability for regulators.

Using Finbots AI CreditX, the bank’s risk team (two analysts, no data-science headcount) generated and deployed ML-based scorecards in under one week. The low-code platform auto-documented feature engineering, validation, and monitoring artefacts, streamlining model-risk governance.

Low-code/no-code AI can compress both development and compliance effort, providing “regulator-ready” transparency while freeing scarce data-science capacity for higher-value work.

| Item | Evidence | Lesson for CTOs |
| --- | --- | --- |
| Executive sponsorship | Retail CEO funded unified data layer; manufacturer’s COO championed IoT rollout; bank’s CRO owned AI roadmap | Top-down mandate clears budget and removes policy gridlock. |
| Iterative rollout | Pilot store APIs, single production line, one lending product = quick wins | Start small, prove ROI, scale in sprints. |
| Trust & governance metrics | Data lineage dashboard (retail), model-drift alarms (bank), MTTD/MTTR KPIs (manufacturer) | Measuring quality and risk builds organisational confidence to democratise further. |

These real-world examples show that when infrastructure, people, and culture align, AI and data democratization move from slideware to P&L impact in months, not years.

It’s always the same question: Is it working?

We put together a compact scoreboard that you, as a technology leader, can use to track momentum, surface early warning signs, and, ultimately, prove commercial impact.

Measure the percentage of employees who run at least one query, build a dashboard, or deploy a low-code model each month.

Rising adoption shows that barriers are falling and bottlenecks are shifting away from the central data team. Target ≥ 50% active usage in the first year, segmented by function, so you can spot lagging departments.
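
A minimal Python sketch of this adoption metric, assuming you can export one month of usage events as (employee, function, action) records from your BI, notebook, and low-code platforms. The action identifiers and the sample data are hypothetical. Each employee counts once per month no matter how many actions they take, and functions below the 50% target are flagged.

```python
from collections import defaultdict

# Qualifying monthly actions named in the text; the identifiers are hypothetical.
QUALIFYING = {"query", "dashboard", "lowcode_model"}


def active_usage_by_function(
    headcount: dict[str, int],
    events: list[tuple[str, str, str]],
    target_pct: float = 50.0,
) -> dict[str, tuple[float, bool]]:
    """Per function: % of employees with at least one qualifying action, plus an on-target flag.

    `events` holds (employee_id, function, action) records for one month.
    """
    active = defaultdict(set)
    for employee, function, action in events:
        if action in QUALIFYING:
            active[function].add(employee)  # a set counts each person once
    report = {}
    for function, total in headcount.items():
        share = 100.0 * len(active[function]) / total if total else 0.0
        report[function] = (share, share >= target_pct)
    return report


headcount = {"finance": 4, "marketing": 3}
events = [
    ("e1", "finance", "query"),
    ("e1", "finance", "dashboard"),  # same person again: still one active user
    ("e2", "finance", "query"),
    ("e3", "finance", "login"),      # not a qualifying action
    ("m1", "marketing", "lowcode_model"),
]
for function, (share, on_target) in active_usage_by_function(headcount, events).items():
    print(f"{function}: {share:.0f}% active{'' if on_target else '  <- lagging'}")
```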

Track how many staff move up the Awareness → Proficiency → Fluency ladder you defined in Section 3.4.

A simple completion metric (“70% of employees passed the Bronze course; 25% reached Silver; 5% earned Gold certification”) gives executives a clear view of cultural change and helps HR align future up-skilling budgets.
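
A sketch of that completion metric in Python, assuming each employee record stores the highest ladder level reached and that shares are cumulative (someone at Fluency also counts toward Awareness and Proficiency, which is how 70%, 25%, and 5% can coexist). The team data is invented for illustration.

```python
from collections import Counter
from typing import Optional

LADDER = ["Awareness", "Proficiency", "Fluency"]  # ordered lowest -> highest


def ladder_shares(highest_level: dict[str, Optional[str]], headcount: int) -> dict[str, float]:
    """Percentage of headcount at or above each ladder level.

    `highest_level` maps employee_id -> highest level reached (None if not started).
    Counting is cumulative: a Fluency holder also counts toward the lower levels.
    """
    reached = Counter(level for level in highest_level.values() if level in LADDER)
    shares, running = {}, 0
    for level in reversed(LADDER):  # walk from the top so totals accumulate downward
        running += reached[level]
        shares[level] = 100.0 * running / headcount if headcount else 0.0
    return {level: shares[level] for level in LADDER}


# Hypothetical 20-person team: 9 at Awareness, 4 at Proficiency, 1 at Fluency, 6 not started.
team = {f"a{i}": "Awareness" for i in range(9)}
team.update({f"p{i}": "Proficiency" for i in range(4)})
team["f0"] = "Fluency"
team.update({f"n{i}": None for i in range(6)})
print(ladder_shares(team, headcount=len(team)))
```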

Two cycle-time indicators reveal whether democratization is translating into agility:

Leading organisations cut these times by 70–90%. Anything still measured in weeks indicates residual friction.
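
A short Python sketch for computing the reduction and flagging weeks-level friction. The two indicator names (request-to-insight and model-to-production time) and all sample durations are assumptions for illustration. Medians are used because cycle-time distributions tend to be skewed by a few very slow requests.

```python
from statistics import median

HOURS_PER_WEEK = 7 * 24


def reduction_pct(baseline_hours: float, current_hours: float) -> float:
    """Percentage reduction in cycle time relative to the baseline."""
    return 100.0 * (baseline_hours - current_hours) / baseline_hours


def still_in_weeks(current_hours: float) -> bool:
    """Treat anything at or above one calendar week as 'still measured in weeks'."""
    return current_hours >= HOURS_PER_WEEK


# Hypothetical indicators and sample durations in hours.
samples = {
    "request_to_insight": {"baseline": [72, 80, 64], "current": [3, 5, 4]},
    "model_to_production": {"baseline": [2160, 1900], "current": [400, 420]},
}
for name, s in samples.items():
    before, now = median(s["baseline"]), median(s["current"])
    flag = "residual friction" if still_in_weeks(now) else "ok"
    print(f"{name}: {reduction_pct(before, now):.0f}% faster, median now {now} h ({flag})")
```

In this invented data, model-to-production time improves by roughly 80%, inside the 70–90% band, yet still takes weeks: exactly the residual friction worth escalating.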

Connect usage to money saved or earned. Pick the dimension most relevant to each initiative:

Tie every major democratization project to at least one of these bottom-line deltas and review them quarterly alongside adoption and speed metrics.

When adoption climbs, cycle times shrink, and financial deltas turn material, you have proof that data and AI are accessible and used enterprise-wide.

The analytics front-end is already shifting from fixed dashboards to conversational interfaces. Gartner’s 2024 Magic Quadrant notes that natural-language and generative query functions are now native in leading BI suites, and early adopters report two to three times more active data users once a chat box replaces drop-down filters.

At the same time, “AI as a colleague” is moving from pilot to mainstream. A May 2025 survey of 645 engineering professionals found that 90% of teams now weave copilots such as GitHub Copilot, Gemini Code Assist, or Amazon Q into daily work, with 62% saying velocity jumped by at least 25%. Similar assistant layers are spreading beyond code into marketing, finance, and customer-service workflows, where domain-specific copilots draft, recommend, and explain in real time.

These capabilities, however, will sit inside a tightening regulatory frame. The EU AI Act begins phasing in from 2 February 2025 (prohibitions and literacy duties) and layers on stricter obligations for GPAI models, governance, and penalties by August 2025, with high-risk system rules completing in 2026–2027. For organizations seeking a global benchmark, the new ISO/IEC 42001:2023 standard offers a management-system blueprint for responsible AI operations and continuous improvement.

In practice, the winning playbook is composable: semantic layers and APIs that let chat-style analytics, task-specific copilots, and compliance controls plug neatly together.

Therefore, enterprises that build for modularity today will spend less time refactoring tomorrow.
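
To illustrate what "composable" can mean in practice, here is a deliberately tiny, hypothetical semantic layer in Python: governed metric definitions live in one registry, and every front-end (a chat box, a copilot, a dashboard) asks it for SQL instead of writing its own. The table, column, and metric names are assumptions; a real semantic layer would also handle joins, filters, and access policies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """One governed metric definition shared by every front-end."""
    name: str
    sql_expression: str              # aggregation over the base table
    table: str
    allowed_dimensions: frozenset[str]


# Hypothetical registry; table and column names are illustrative only.
REGISTRY = {
    "trial_to_paid_rate": Metric(
        "trial_to_paid_rate",
        "AVG(CASE WHEN converted THEN 1.0 ELSE 0.0 END)",
        "analytics.trials",
        frozenset({"channel", "signup_month"}),
    ),
}


def compile_query(metric_name: str, dimension: str) -> str:
    """Turn (metric, dimension) into SQL; chat UIs, copilots, and dashboards all call this."""
    metric = REGISTRY[metric_name]  # KeyError for metrics nobody has governed yet
    if dimension not in metric.allowed_dimensions:
        raise ValueError(f"{dimension!r} is not an approved dimension for {metric_name}")
    return (
        f"SELECT {dimension}, {metric.sql_expression} AS {metric.name} "
        f"FROM {metric.table} GROUP BY {dimension}"
    )


print(compile_query("trial_to_paid_rate", "channel"))
```

Because dimensions are checked against an allowlist before they reach the SQL string, the same registry doubles as a guardrail: a chat interface cannot group by a column nobody has approved.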

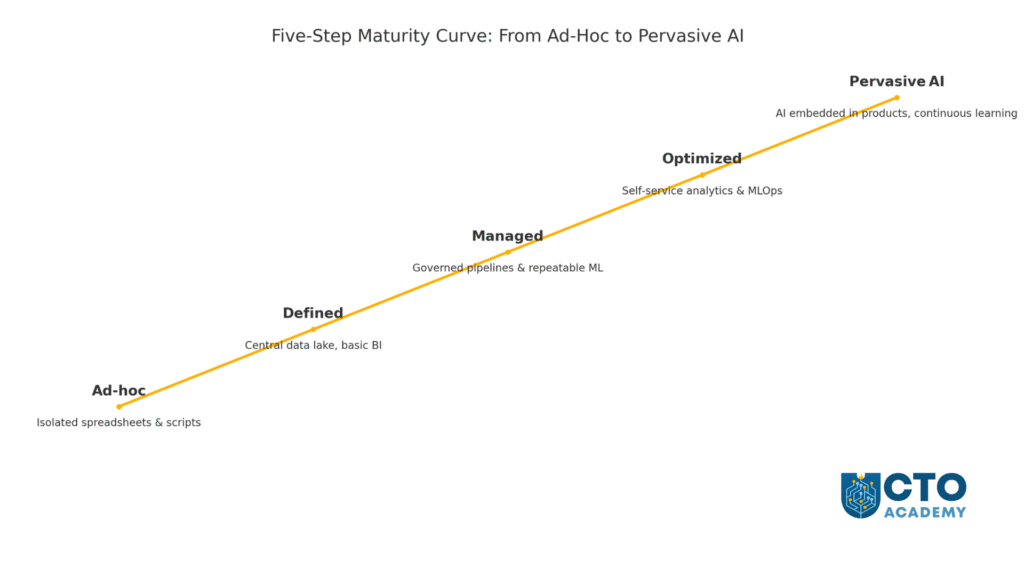

The path to enterprise-wide value follows a clear arc:

Together, these steps turn isolated assets into a shared engine for insight and invention.

The game is on, and the clock is ticking. Gen AI is compressing product cycles to weeks, customers expect real-time personalisation, and the EU AI Act will soon make transparency non-negotiable. What was once a competitive edge is fast becoming the minimum ante to stay in the game.

Therefore, start small but start now. In other words, choose one business problem, stand up a governed sandbox, and empower a cross-functional team to solve it with self-service tools. Measure the gains, harden the guardrails, then replicate.

And remember, pilot-to-platform scaling, when firmly anchored in governance, ensures that a) speed never outruns safety, and b) data democratization delivers lasting, measurable returns.
